What the Heck are Automated Tests?

By: Brad

Hey Brad, we are being asked to write automated tests with every code change. This is just more effort that slows us down, and I don’t see the value. What the heck are automated tests for?

I hear you. You spend all this effort making your changes and verifying that they work, only to then have to go back and write a bunch of automated tests because you were told you should. What a bother.

The thing is, automated tests are a tool to help you and the next developer who comes along and needs to make changes in that part of the code.

There is an excellent article by Martin Fowler on automated tests or, as he cleverly puts it, self-testing code, in which he talks about an observation he made when looking at hardware. Anyone who has ever worked with electronics could tell you that a lot of bench equipment, such as oscilloscopes or signal generators, has a button labeled Test. This type of equipment often needs calibration, and if it is not working you could end up troubleshooting your system for quite a while. To provide confidence that the equipment is working as expected, it tends to have a set of self-diagnostics which you can kick off if you start to suspect an equipment malfunction.

In the article, Martin Fowler muses that it would be nice to have that level of self-diagnostic capability for software: a way to know whether the odd behaviour was caused by something you just did or whether other parts of the software are not working as expected.

Automated tests are your software’s self-diagnostics

Proper automated tests can provide multiple services to benefit software developers:

- They help you stop fearing your code

- They act as living documentation

- They help you design a better interface

- They help you test the untestable

Lose the Fear

How many times have you heard “We can’t change that or we might break something else” or “We shouldn’t touch that because it is working now and we don’t want to break it”?

I’ve heard those words, and others like them, uttered many times in my career, and in my opinion it all stems from one place: fear.

The fear of breaking working code is a very real thing. When your boss asks for a new feature, the last thing you want to do is break other, unrelated features in the process. So when a change request comes in for a particularly hairy part of the code, the natural reaction is to say it can’t be done, or that we would be better off greenfielding the project. When you get yourself into this predicament, the sad reality is that something has gone horribly wrong, because the most important thing about good software is that it is soft, that is, easy to change. When software becomes too hard to change, it does not matter that it is working now; the fact is that it won’t work later, thus it is not working software.

A good solution to code fear is to use a tool which will have your back and let you know when you start breaking things.

Automated tests are your safety net, allowing you to make fearless changes.

Automated tests are your first line of defence against unintentional behavioural changes. They do not replace a good QA team. However, it is commonly known that the longer it takes to find a bug, the more costly it is to fix, and you can’t expect your QA team to check for unintentional changes each time you hit save; that is a job best suited to a machine.

Computers are fast, really fast, and they are good at repetition. That means they can rerun the same set of tests over and over again extremely quickly. On one project I was on, I had over 8000 tests which ran automatically each time you compiled the code locally. I was able to do this because the whole suite took less than a second to complete. This meant that instead of making changes, being happy enough with them to commit them, and then waiting 24 hours or more for my QA team to point out that I broke features A, B, and C while adding new feature X, I could simply press F5 to compile my changes as I went and be told immediately that I broke something. The super small feedback loop meant that it was very cheap for me to fix the bugs I was unintentionally writing; I fixed them as I wrote them.

The result was that I stopped fearing my code. I could make changes with impunity, without worrying that I’d break anything, because I knew I had the tools to catch the mistakes as they happened.
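To make the safety net idea concrete, here is a minimal sketch using Python’s built-in `unittest` module. The `shipping_cost` function and its rates are hypothetical, invented purely for illustration. The first two tests pin down behaviour that already worked, so if adding the express option (the "new feature X") accidentally changed the standard rate, the suite would say so right away.

```python
import unittest


def shipping_cost(weight_kg: float, express: bool = False) -> float:
    """Hypothetical example: flat fee plus a per-kilogram rate."""
    if weight_kg <= 0:
        raise ValueError("weight must be positive")
    cost = 5.0 + 2.0 * weight_kg
    # The newly added feature: express delivery doubles the price.
    return cost * 2 if express else cost


class ShippingCostTest(unittest.TestCase):
    # These tests pin down the behaviour that already worked.
    def test_standard_rate(self):
        self.assertEqual(shipping_cost(3), 11.0)

    def test_rejects_non_positive_weight(self):
        with self.assertRaises(ValueError):
            shipping_cost(0)

    # This test covers the new feature.
    def test_express_doubles_the_cost(self):
        self.assertEqual(shipping_cost(3, express=True), 22.0)
```

Saved in a file, these can be run with `python -m unittest`, which takes a fraction of a second and can be wired into whatever you already do on each build.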

Refactor is no longer a four-letter word

I could refactor great big swaths of code to clean up technical debt and improve the overall code structure. I could replace or update deprecated modules, and adding new features no longer consisted of copy-n-paste because I could refactor existing code to make it more accepting of change.

In short, with a proper suite of automated tests and an acceptable level of coverage, you no longer have to be afraid of the code. You can start adapting quickly to market needs, avoid customer-facing bugs, and reduce the overall maintenance cost of your software over time, and you’ll have a whole lot more fun to boot.

Living Documentation

Have you ever joined a project, started looking at a bit of code, and thought, how is this thing being used? Do you ever turn to a user manual or Readme only to find it lacking examples, or that the examples are out of date or incorrect?

Automated tests provide live code examples of how a bit of code is to be used. If the use of the code changes and the tests are not updated, then the example is wrong, but the tests also fail, so you know it is wrong. If you set up your process so that failing tests must be addressed, then it forces you to keep your documentation up to date: you have to update your tests to make them pass again, and so they stay correct as examples of how to use the code.

Automated tests document the expected response for different inputs

You might not always look at the tests to understand how the code should work, because you might have access to the code in question and don’t mind doing a little spelunking. But on the day you don’t have access to the code, or all the cave diving in the world can’t explain what inputs are required, you can always look up the tests and see what they are doing.
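As a rough illustration of tests doubling as documentation, consider the sketch below. The `parse_duration` helper is hypothetical and exists only for this example. Even without reading its body, the test names and assertions tell you which inputs are accepted, what comes back, and what gets rejected.

```python
import unittest


def parse_duration(text: str) -> int:
    """Hypothetical helper: convert text such as '1h30m' into seconds."""
    units = {"h": 3600, "m": 60, "s": 1}
    total, digits = 0, ""
    for ch in text.strip():
        if ch.isdigit():
            digits += ch
        elif ch in units and digits:
            total += int(digits) * units[ch]
            digits = ""
        else:
            raise ValueError(f"cannot parse duration: {text!r}")
    if digits:
        # Trailing digits with no unit, e.g. "10".
        raise ValueError(f"missing unit in duration: {text!r}")
    return total


class ParseDurationExamples(unittest.TestCase):
    def test_hours_and_minutes_are_added_together(self):
        self.assertEqual(parse_duration("1h30m"), 5400)

    def test_seconds_only(self):
        self.assertEqual(parse_duration("45s"), 45)

    def test_number_without_a_unit_is_rejected(self):
        with self.assertRaises(ValueError):
            parse_duration("10")

    def test_unknown_unit_is_rejected(self):
        with self.assertRaises(ValueError):
            parse_duration("5x")
```

If someone later changes the accepted format, these examples fail until they are updated, which is exactly what keeps them honest.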

Better Design Through Tests

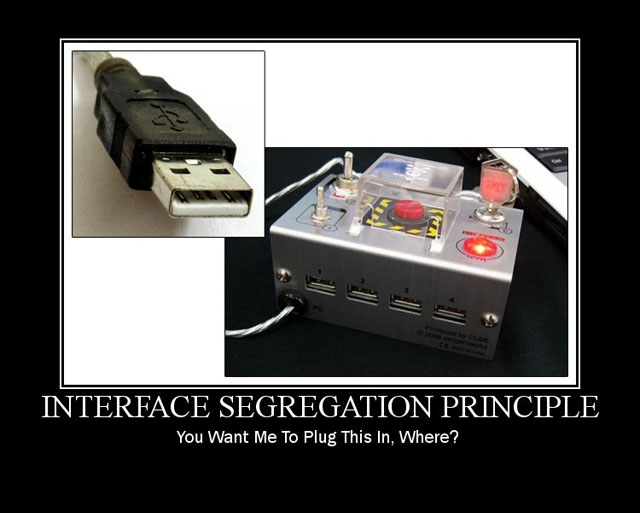

Have you ever been given a bit of code and found it really hard to use? I mean, you are given a class, but to instantiate it you have to pass it pretty much the entire world and the kitchen sink? Or maybe the class or module in question has an API you want to use, but you have to pass it a dozen or so unrelated parameters for it to work?

The Interface Segregation Principle (ISP) is one of the S.O.L.I.D. principles. It states that interfaces should be fine-grained and client-specific; in other words, easy to use.

For me, ISP goes back to the Single Responsibility Principle (SRP), in that things should have only a single reason to change; a large and hard-to-use interface will likely have multiple change vectors.

Automated tests are the first users of your code

When implementing code, the interface may start off simple, then grow and grow and grow. While you are implementing it you can lose track of that, either because you never suffer through using your own code or because you only had to suffer once and then forgot about it; you acclimate to the pain and move on. However, if you write automated tests, you switch modes from implementer to consumer and have to start acknowledging the ease of use of the code you’re writing.

If it is hard to test, then it is going to be hard to use

If you end up having to stub out a dozen or so dependencies, then in the real world consumers of your code will have to instantiate a dozen or so dependencies, which might be hard or unnecessary.

One time when I was practicing TDD, I started to discover as I was writing tests that not only were my dependencies growing, they were not being used uniformly.

I was creating a class, and I asked myself: what does this class need to do? I wrote a test for each case, and as I walked through writing the tests I thought about what dependencies each case was going to rely on and added them via constructor injection. By the time I finished covering all the cases, I looked at my constructor and noticed that half my tests needed dependency A and the other half needed dependency B; there were no cases where both dependencies were needed.

The act of trying to use my class showed me that I would end up with consumers artificially pulling in a dependency they didn’t need if they only needed half the operations on the class, which was a likely scenario. I was in fact writing two classes, not one, so I split the tests and implemented two separate classes. Without the tests it would have taken longer for me to realize this, if I realized it at all.
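The shape of that discovery can be sketched roughly as follows. This is not the class from the story; `ReportService`, `ReportLoader`, `ReportSender`, `store` and `mailer` are all hypothetical names made up for illustration. The "before" class takes two constructor-injected dependencies that no single method uses together, and the "after" classes each ask only for what they need.

```python
import unittest
from unittest.mock import Mock


# Before: one class, two injected dependencies, but no method uses both.
class ReportService:
    def __init__(self, store, mailer):
        self.store = store
        self.mailer = mailer

    def load(self, report_id):
        return self.store.get(report_id)  # only needs the store

    def send(self, address, body):
        self.mailer.send(address, body)  # only needs the mailer


# After: two classes, each with a single dependency.
class ReportLoader:
    def __init__(self, store):
        self.store = store

    def load(self, report_id):
        return self.store.get(report_id)


class ReportSender:
    def __init__(self, mailer):
        self.mailer = mailer

    def send(self, address, body):
        self.mailer.send(address, body)


class ReportLoaderTest(unittest.TestCase):
    def test_load_returns_the_stored_report(self):
        store = Mock()
        store.get.return_value = "Q3 numbers"
        self.assertEqual(ReportLoader(store).load(42), "Q3 numbers")
        store.get.assert_called_once_with(42)


class ReportSenderTest(unittest.TestCase):
    def test_send_hands_the_message_to_the_mailer(self):
        mailer = Mock()
        ReportSender(mailer).send("qa@example.com", "Q3 numbers")
        mailer.send.assert_called_once_with("qa@example.com", "Q3 numbers")
```

Notice that each test now sets up exactly one fake. With the combined class, every test would have had to supply a dependency it never touched, and that is the smell the tests surface.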

What about Test Induced Damage?

If you have not heard of this, there is a post by a fellow named DHH in which he argues that automated tests lead to poor and/or costly design. There is a video series in which Martin Fowler, Kent Beck and DHH debate the subject, which I highly recommend (or read this summary). I’ll give DHH that improper tests or too much stubbing can lead to damage; however, the smell there is less about automated testing and TDD in general and more about improper application of design practices. Some of his points fly in the face of proper separation of concerns, stating that changes made to the code just to make it testable damage the design. I, however, feel that, again, if it is hard to test then it is hard to use or change. Another complaint he had was about excessive indirection introduced just to allow for stubbing. On one hand I disagree, because the use of hard-coded globals (singletons) makes code harder to adapt to changes in market needs; on the other hand I agree that excessive stubbing can make tests brittle, but that is probably more related to testing code vs. testing behaviour.

Again, I recommend the video series. It is fair and balanced, and so engaging that it had me yelling at my monitor the whole time.
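To show what I mean by testing code vs. testing behaviour, here is a small sketch of my own; it is not taken from the debate. The `total_price` function and its `rounder` parameter are hypothetical. The first test checks only the observable result, while the second is coupled to how the result is produced.

```python
import unittest
from unittest.mock import Mock


def total_price(prices, tax_rate, rounder=round):
    """Hypothetical function: sum the prices, add tax, round to cents."""
    return rounder(sum(prices) * (1 + tax_rate), 2)


class TotalPriceTest(unittest.TestCase):
    def test_behaviour_total_includes_tax(self):
        # Asserts on the result only, so it survives any refactor
        # that keeps the result the same.
        self.assertEqual(total_price([10.0, 20.0], 0.25), 37.5)

    def test_implementation_rounder_is_called(self):
        # Asserts on an internal collaboration. If the function stops
        # delegating to `rounder`, this fails even though the answer
        # callers see has not changed.
        rounder = Mock(return_value=37.5)
        total_price([10.0, 20.0], 0.25, rounder=rounder)
        rounder.assert_called_once_with(37.5, 2)
```

Both tests pass today. The difference only shows up on the day you refactor: the first keeps quiet as long as the behaviour holds, while the second has to be rewritten alongside the code.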

Test the Untestable

How many times have you pushed code and seen it work, only to be told by QA that it just crashed? How many times was that due to an unhandled exception?

There is a term I’ve heard used recently called Happy Path Testing, where only the cases in which things work get tested while error-handling cases get ignored. Often the crashes are caused by improper error handling, which goes untested because it is hard to induce failures in dependencies.

When implementing code which depends on other code, you need to handle the cases where the other code can fail, but how does one induce a failure in the dependency?

If the failure can be triggered by providing faulty input, then that is pretty easy: input the bad data and ensure your code handles the error. The same goes if the error case is when the dependency is absent: shut down the dependency and again ensure your code is handling it. But what if the error case is harder to induce? What if the only way to induce it is to follow a series of complicated steps, and what if those steps have to be re-run each time? The likelihood of you performing the Sad Path Testing each time you make a change is slim, isn’t it?

Automated Tests can test the Sad Paths

Now, not all forms of automated tests can help here, but if we talk about unit tests specifically, then we have the option to mock the dependency: replace it with a fake version which we can hard-code to generate the failure condition.

With automated unit tests we can mock out dependencies so that we can test all the possible response cases to ensure we are handling them correctly. This allows us to be confident that our code works not only in the Happy Path but also in the Sad Path.
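Here is a minimal sketch of that idea using `unittest.mock` from the Python standard library. The `fetch_greeting` function and the `client.get_name` call are hypothetical stand-ins for your code and its dependency. Setting `side_effect` to an exception makes the fake raise on demand, so the hard-to-induce failure becomes a one-liner.

```python
import unittest
from unittest.mock import Mock


def fetch_greeting(client, user_id):
    """Hypothetical code under test: fall back if the dependency times out."""
    try:
        name = client.get_name(user_id)
    except TimeoutError:
        return "Hello, guest"
    return f"Hello, {name}"


class FetchGreetingTest(unittest.TestCase):
    def test_happy_path_uses_the_name_from_the_dependency(self):
        client = Mock()
        client.get_name.return_value = "Ada"
        self.assertEqual(fetch_greeting(client, 7), "Hello, Ada")

    def test_sad_path_falls_back_when_the_dependency_times_out(self):
        client = Mock()
        # Hard-code the failure condition instead of trying to provoke
        # a real timeout in a real service.
        client.get_name.side_effect = TimeoutError("service too slow")
        self.assertEqual(fetch_greeting(client, 7), "Hello, guest")
```

The sad path test is no harder to write or run than the happy path one, so it gets run every time rather than only when someone remembers the complicated manual steps.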

This does not negate the need for a QA team. A mock is only as good as the known expected responses, and mocks don’t always stay in sync with the dependency they are mocking, which is why integration and system tests are still important. But mocks provide a much quicker feedback loop that lets you verify that your sad path code works before passing it over to QA.

The benefit here is that QA does not need to spend as much time testing all the known cases and can spend more time testing the unexpected ones.

Exploratory testing, it is what humans do best

So that is what automated tests are all about. They are tools to shorten feedback loops so that issues can be fixed sooner and more cheaply; they remove the fear so you can make changes boldly; they document your code in a way that is always up to date; they help you identify poorly designed code by acting as the first consumer of the code; and they help you test all the code branches to avoid nasty surprises once you go live.

In the long run the benefit of these tools will more than outweigh the extra effort to write them in the first place. If the tests are restricted to testing behaviour, they won’t need maintaining when you change the code, only when you change the behaviour. The time spent hunting down bugs introduced weeks ago will be gone, as most of them will be caught by the test suite. The time spent manually regression testing the entire application will be a thing of the past, as there is more confidence in the team’s ability to change code without breaking everything. The time spent waiting to be told something broke will be reduced to seconds, as the tests are far faster at testing your code than any human.

Automated tests are a powerful tool in your arsenal. Use them, but use them wisely: good tests can make or break a product, but bad tests can be worse than any customer-facing defect. Bad tests erode trust: they erode trust in all of the tests, they erode trust in your developers’ ability to keep the product viable in the market, and thus they can erode trust in your product. When that happens, budgets shrink and companies lose trust in our profession as a whole.

So practice: practice writing tests, and practice refactoring code with tests. Figure out which tests help and how to spot poorly written tests. The better you are at using tests as a tool, the more performant you will become and the more valuable and relevant your product and your company can be.

Until next time, think imaginatively and design creatively.